On the sister blog I report on a new paper, “Don’t Get Duped: Fraud through Duplication in Public Opinion Surveys,” by Noble Kuriakose, a researcher at SurveyMonkey, and Michael Robbins, a researcher at Princeton and the University of Michigan, who gathered data from “1,008 national surveys with more than 1.2 million observations, collected over a period of 35 years covering 154 countries, territories or subregions.”

They did some forensics, looking for duplicate or near-duplicate records as a sign of faked data, and they estimate that something like 20% of the surveys they studied had “substantial data falsification via duplication.”
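The kind of duplication forensics described above can be sketched roughly as follows. This is a minimal illustration, not the paper's exact procedure. For each respondent, it computes the highest share of questions on which they give the same answer as any other respondent. It then flags the survey if enough respondents look like near-copies. The function names, the 0.85 match threshold, and the 5% survey-level cutoff are all hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical thresholds chosen for illustration; not taken from the paper.
MATCH_THRESHOLD = 0.85   # a respondent counts as a near-duplicate above this match share
SURVEY_THRESHOLD = 0.05  # a survey is flagged if at least this share of respondents are near-duplicates


def max_percent_match(responses: Sequence[Sequence[object]]) -> List[float]:
    """For each respondent, return the highest share of questions on which
    they give the same answer as any other respondent in the survey.
    Assumes every row has the same (non-zero) number of questions."""
    n = len(responses)
    scores = [0.0] * n
    for i in range(n):
        for j in range(i + 1, n):
            a, b = responses[i], responses[j]
            share = sum(x == y for x, y in zip(a, b)) / len(a)
            # The match is symmetric, so update both respondents at once.
            scores[i] = max(scores[i], share)
            scores[j] = max(scores[j], share)
    return scores


def flag_survey(
    responses: Sequence[Sequence[object]],
    match_threshold: float = MATCH_THRESHOLD,
    survey_threshold: float = SURVEY_THRESHOLD,
) -> Tuple[float, bool]:
    """Return (share of near-duplicate respondents, whether the survey is flagged)."""
    if not responses:
        return 0.0, False
    scores = max_percent_match(responses)
    share = sum(s > match_threshold for s in scores) / len(scores)
    return share, share >= survey_threshold


if __name__ == "__main__":
    survey = [
        [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],
        [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],  # exact copy of the first respondent
        [5, 4, 3, 2, 1, 5, 4, 3, 2, 1],
        [2, 2, 2, 2, 2, 2, 2, 2, 2, 2],
    ]
    print(max_percent_match(survey))  # [1.0, 1.0, 0.2, 0.2]
    print(flag_survey(survey))        # (0.5, True)
```

The pairwise comparison is quadratic in the number of respondents. That is fine for a single survey of a thousand or so interviews. On short questionnaires, or questions where nearly everyone gives the same answer, honest respondents can also match by chance, which is one reason a simple threshold rule is crude.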

These were serious surveys such as Afrobarometer, Arab Barometer, Americas Barometer, International Social Survey, Pew Global Attitudes, Pew Religion Project, Sadat Chair, and World Values Survey. To the extent these surveys are faked in many countries, we should really be questioning what we think we know about public opinion in these many countries.

That is Andrew Gelman.

At a quick glance, the paper's approach to calling data "duplicated" is a bit crude. But I've worked with several of the survey firms that produced these surveys in Africa, and I have no trouble imagining that the data have very serious problems.

Of course, if 20% of the surveys have a 5% duplication problem, I'm not sure that makes them much worse than the other data scholars use. Researchers use national statistics and cross-national databases all the time as if the data were valid, when most are terrible. When I referee a paper, it's obvious who knows what's under the data and who never bothered to look and simply downloaded it blindly.
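To put the "20% of surveys with a 5% duplication problem" scenario in perspective: the pooled share of duplicated records is a size-weighted average across surveys. Here is a minimal sketch with hypothetical survey sizes. Suppose there are 100 equal-sized surveys of 1,000 records each, and 20 of them carry 5% duplicates. Then about 1% of all pooled records are duplicates. Real surveys differ in size, and "5% or more" is a floor, so the actual pooled share could be higher.

```python
from typing import Iterable, Tuple


def pooled_duplicate_share(surveys: Iterable[Tuple[int, int]]) -> float:
    """Share of all pooled records that are duplicates, given
    (n_records, n_duplicated) for each survey."""
    total_records = 0
    total_duplicated = 0
    for n_records, n_duplicated in surveys:
        total_records += n_records
        total_duplicated += n_duplicated
    return total_duplicated / total_records if total_records else 0.0


if __name__ == "__main__":
    # Hypothetical: 100 surveys of 1,000 records; 20 have 5% (50) duplicates.
    surveys = [(1000, 50)] * 20 + [(1000, 0)] * 80
    print(pooled_duplicate_share(surveys))  # 0.01
```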

But back to the surveys. Duplication is terrible, but the least of my worries. For instance:

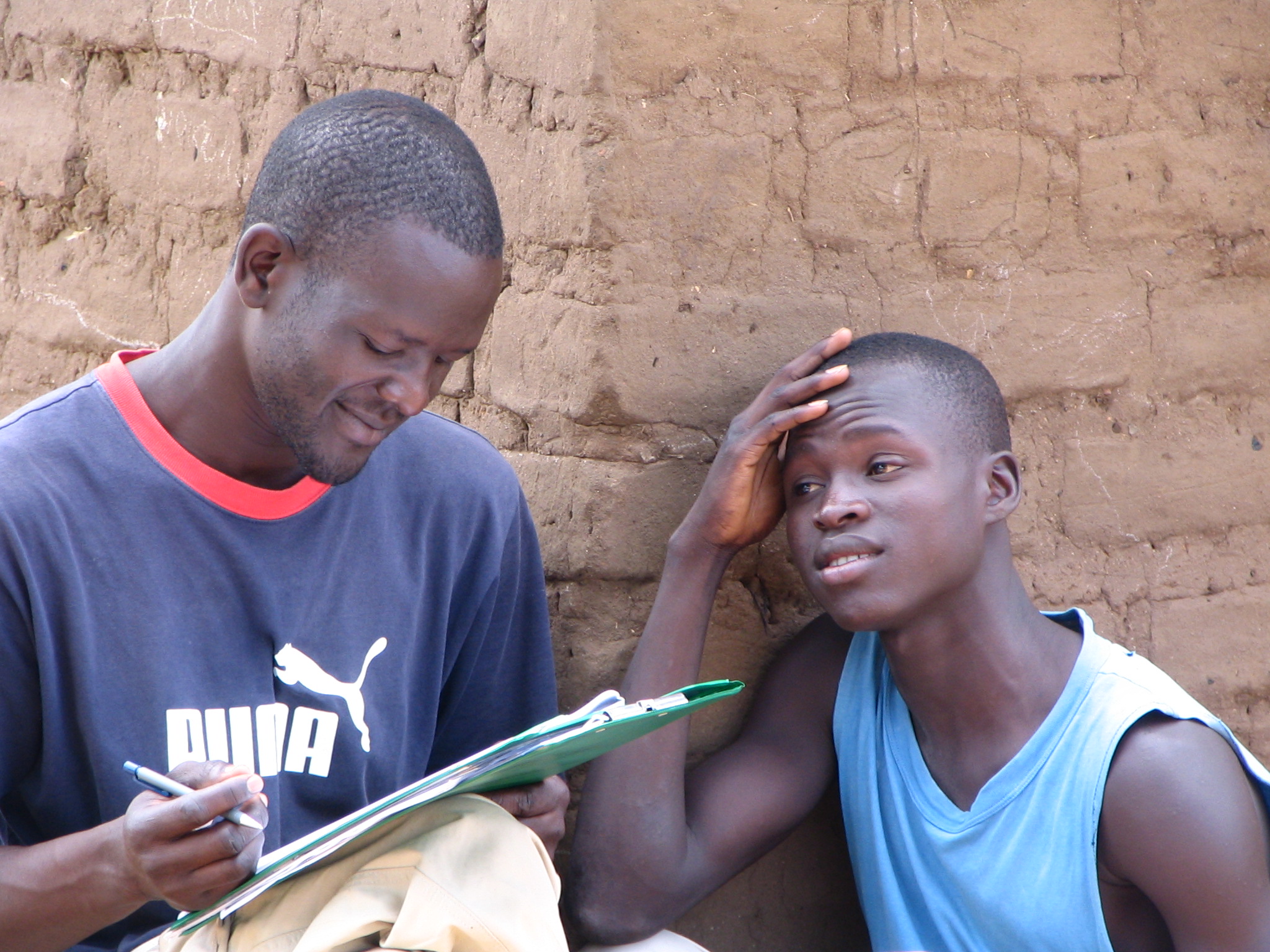

- The questions from most of these surveys sound perfectly sensible until you sit down and ask them to someone in a village. Then the absurdities become immediately apparent. Researchers: If you have a chance, print out any one of these surveys and test it out sometime. You will never operate the same way again.

- Then there’s the poor quality of much of the legitimately-collected data from rushed, tired, poorly incentivized enumerators.

- Finally, these survey firms are for-profit enterprises with very different incentives and constraints from those of the researchers. They often have limited cash flow, middling middle management, and their average customer is a private firm or development agency that pays little attention to data quality.

If I want reliable data, I mostly do not use private survey firms. I hire and train teams myself (when I can, through a local non-profit research organization or an international one like Innovations for Poverty Action). And if I must use a firm, I hire a researcher I trust to keep an eye on things full time. I recommend nothing else.

54 Responses

Your analysis is only as good as your data. Poor analysis of bad data is the worst (and way too common) https://t.co/2ug4ud2mWn

“If I want reliable data, I hire & train teams myself (when I can through a local or intl non-profit research org.” https://t.co/mg09ubR3Dm

How much of the data you download is made up?: https://t.co/2AhkOn8q8s

“these surveys sound perfectly sensible until you sit down & ask them to someone in a village” the band marches on.. https://t.co/fM2wQJ6VtO

RT @cblatts: How much of the data you download is made up? https://t.co/PuvQWLq3j1

Not for the first time, @cblatts has a post that is simultaneously very good and pretty depressing https://t.co/r5Nr8HKBIj

How much of the data you download is made up? On survey quality and the use of survey firms https://t.co/FEwxgwxj6o h/t @cblatts

“Can you trust international surveys?” https://t.co/fofI0YwvQ2 and https://t.co/oTJthhmcfY see also @cblatts’s https://t.co/UHG3qhh2Hz

#Marketing #MRX alert! 20% surveys w/ made-up data! Other issues cited here overblown – Fabrication is THE problem https://t.co/qxpQyzKf3H

“The questions from most of these surveys sound perfectly sensible until you sit down and ask them to someone in a village. Then the absurdities become immediately apparent. Researchers: If you have a chance…”

There’s always a chance. Even if you can’t go to a remote village, try the questions out on someone — I used to start with my late mother (Mom was a good critic of ambiguous language).

Pretesting questions is one of the cheapest and most efficient ways to improve the survey results.

How much of the data you download is made up? – @cblatts https://t.co/VmcCnak2gh

ICYMI study estimates 20% of #surveys from 154 countries have 5% or more made up #data https://t.co/SXuMALaogs

How much of the data you download is made up? https://t.co/vQfVRBeitl

How much of the data you download is made up? https://t.co/SCjLzJT8dE

That’s funny. Data duplication is a source of error. Reaction? Comments and identical re tweets “How much of the data you download is made up?”

‘most of these surveys sound perfectly sensible until you sit down and ask them to someone in a village’ https://t.co/qjTnKCu7tF

@cblatts @GavinChait 12.8%

@RAVerBruggen @cblatts 95%

How much of the data you download is made up? https://t.co/3CqDt1BoNl

no quality control w/o intensive time/skill investment >> How much of the data you download is made up? | @cblatts https://t.co/uHYTjmn7TT

How much of the data you download is made up? https://t.co/pFa3KmJLWY

“Duplication is terrible, but the least of my worries.” @cblatts on data falsification in international surveys https://t.co/mPy7OS6cQT

How much of the data you download is made up? https://t.co/HZzlBLP3FU

How much of the data you download is made up? https://t.co/eqA12k0era

How much of the data you download is made up? https://t.co/SjwlEWeCXe

.@cblatts explores an important question – how much data from surveys is made up? https://t.co/6YUrrd2oKq

Especially as a consumer of secondary #data myself, a necessary reminder to always dig deeper. From @cblatts https://t.co/hp8IaW01tr
