Guest post by Jeff Mosenkis of Innovations for Poverty Action.

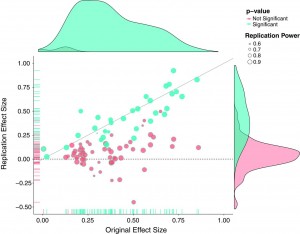

- A new paper in Science has results from a collaboration by 270 researchers through the efforts of the Center for Open Science to systematically reproduce 100 psychology studies (data & code here). As they put it:

47% of original effect sizes were in the 95% confidence interval of the replication effect size; 39% of effects were subjectively rated to have replicated the original result; and if no bias in original results is assumed, combining original and replication results left 68% with statistically significant effects.

- Headlines are shouting “fewer than half of psychology studies replicate,” but Vox’s reporter says that after talking with one of the researchers about how hard it was to set up the identical experiment (in a different country/language), she was surprised anything replicates at all. A failed replication might not mean the original finding was a fluke; it could just mean the replicators didn’t manage to measure the same thing the same way.

- A prominent researcher says the NYTimes misquoted him on this story to make him sound more skeptical than he is, and posted his own notes from the conversation, a great lesson for any researcher being quoted.

- Bruce Wydick has an interesting post on what academic and faith-based development practitioners can learn from each other.

- In the US, subsidies for people to move from poor neighborhoods to better ones had positive long-term outcomes (PDF), but Barnhardt, Field, & Pande find that in India, taking people away from their social support networks is very disruptive, and many people refused or left the program (PDF).

- And a new visualization tool lets you play with data to show how moving data points (even one outlier) changes a correlation and variance overlap.
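For anyone who would rather see the outlier effect in numbers than drag points around, here is a minimal sketch in Python. The data are made up for illustration, `pearson_r` is a helper written for this example (it is not part of the visualization tool), and "variance overlap" is taken here to mean the squared correlation, which is an assumption about what the tool displays.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations from the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations for each variable
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


# Made-up data with a clear positive relationship (illustrative only)
xs = [1, 2, 3, 4, 5, 6]
ys = [2, 1, 4, 3, 6, 5]
r_before = pearson_r(xs, ys)

# Add a single extreme point far from the rest of the data
xs_outlier = xs + [20]
ys_outlier = ys + [-10]
r_after = pearson_r(xs_outlier, ys_outlier)

# r squared is used here as the "shared variance" between the two variables
print(f"without outlier: r = {r_before:.2f}, r^2 = {r_before ** 2:.2f}")
print(f"with outlier:    r = {r_after:.2f}, r^2 = {r_after ** 2:.2f}")
```

In this toy dataset, one added point is enough to turn a strong positive correlation into a negative one, which is the kind of fragility the tool makes easy to see.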