Unofficially, the WSJ reports that the next World Bank Chief Economist will be Paul Romer.

Romer made his career on growth theory, but more recently he has been known for his push for Charter Cities. Here’s his web page and interesting blog. (I’ve been a skeptic, but I like Paul a lot, and we’ve had an interesting back-and-forth on the idea. See my discussion and critique from 2009, Romer’s reply, and my follow-up comment.)

Meanwhile, the World Bank has become known in recent years first for its push for more micro data on poverty, and now for a gush of randomized control trials.

It sounds like this is something Romer might try to change. Today, Tyler Cowen pointed us to a recent blog post from Romer, which he called “Botox for Development”:

“The x-ray shows a mass that is probably cancer, but we don’t have any good randomized clinical trials showing that your surgeon’s recommendation, operating to remove it, actually causes the remission that tends to follow. However, we do have an extremely clever clinical trial showing conclusively that Botox will make you look younger. So my recommendation is that you wait for some better studies before doing anything about the tumor but that I give you some Botox injections.”

If it were me, I’d get a new internist.

To be sure, researchers would always prefer data from randomized treatments if they were available instantly at zero cost. Unfortunately, randomization is not free. It is available at low or moderate cost for some treatments and at a prohibitively high cost for other potentially important treatments. Our goal should be to recommend treatments and policies that maximize the expected return, not to make the safest possible treatment and policy recommendations.

I agree and I don’t.

I agree that governments (and the development agencies that support them) have to focus on growth. And most of the policies that promote growth aren’t friendly to randomized trials. But they can be theory- and evidence-based, at least to some extent. Macroeconomics has a tendency to be an evidence-free zone, though, so we also need to be careful.

When I teach my political economy of development class, I always end on a few quotes. One is from Karl Popper:

The piecemeal engineer knows, like Socrates, how little he knows. He knows that we can learn only from our mistakes. Accordingly, he will make his way, step by step, carefully comparing the results expected with the results achieved, and always on the look-out for the unavoidable unwanted consequences of reform; and he will avoid undertaking reforms of a complexity and scope which make it impossible for him to disentangle causes and effects, and to know what he is really doing.

Such ‘piecemeal tinkering’ does not agree with the political temperament of many ‘activists’. Their programme, which too has been described as a programme of ‘social engineering’, may be called ‘holistic’ or ‘Utopian engineering’.

Holistic or Utopian social engineering, as opposed to piecemeal social engineering, is never of a ‘private’ but always of a ‘public’ character. It aims at remodelling the ‘whole of society’ in accordance with a definite plan or blueprint…

One of the things I appreciate about randomized trials is the regimented process of piecemeal tinkering. Even when they do not run a rigorous trial, a lot of what governments and the Bank are doing is piecemeal tinkering. And the charitable view would say that randomized trials help sort out the bad from the good results after some period of tinkering.

The good trials aren’t just Botox. One example: The Bank encourages countries to borrow hundreds of millions of dollars a year for various employment and labor market projects. A lot of my research suggests that most of the recommended programs don’t work. That is a big problem, and randomized trials have been part of the solution.

Romer would probably agree. But his blog post highlights some places where we differ.

The thing that worries me about policies that “maximize the expected return”, and that don’t “make the safest possible treatment and policy recommendations” is that development agencies and governments have a long history of getting the big answers wrong. Really wrong.

The number one book I have my students read is James Ferguson’s The Anti-Politics Machine (actually I think most of them read the short article version). I also get them to read Nic van de Walle’s book on structural adjustment. Both highlight the grand and unexpected consequences of grand World Bank plans of the past.

A next favorite is Seeing Like a State by Jim Scott. On a bigger historical scale, it too chronicles our history of big plans with big failures. I want to get some hubris and risk aversion settled into my students’ bones.

That’s not to say I’m opposed to big plans or ideas. Paul Seabright has one of my favorite critiques of Jim Scott, explaining why scientific and state planning are perfectly effective in some cases. I just think any government, and every non-democratic development agency, ought to behave very cautiously and be very risk averse on behalf of the poor. It must make safe recommendations.

In any case, I welcome some new, big macro thinking at the World Bank. Alongside the piecemeal tinkering and randomized trials, I think it’s time for the Bank to do some innovative and more rigorous macro thinking beyond stabilization policies, improving the investment climate, and so on.

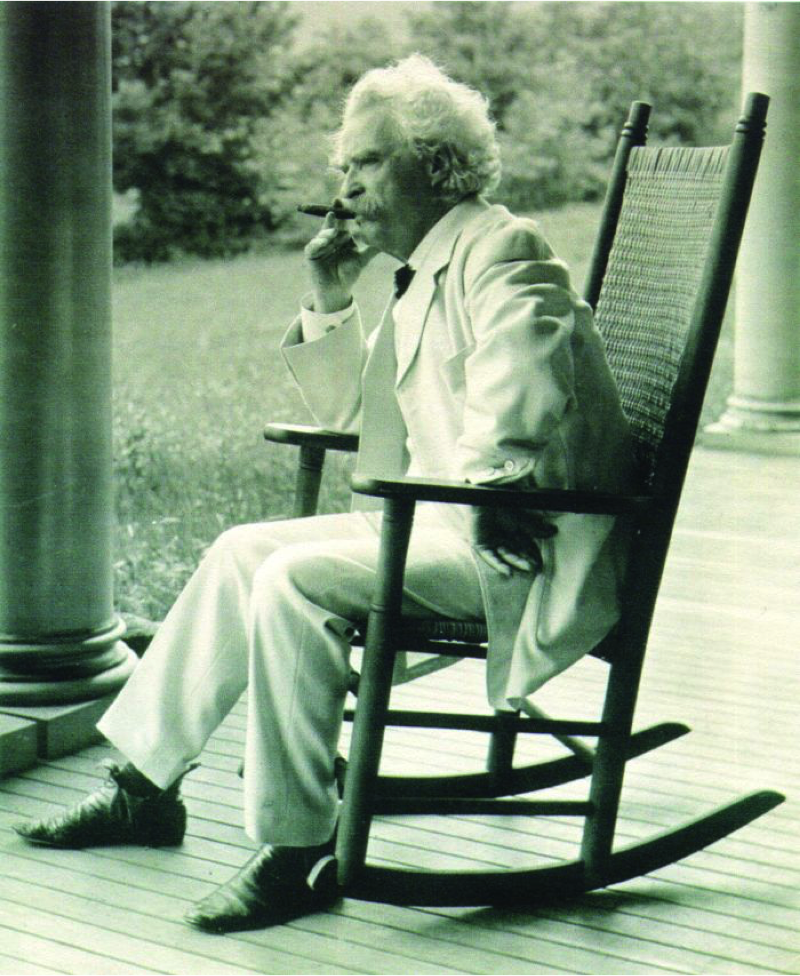

But I’ll leave you with the same quote I end my course on. It comes from Mark Twain: “It ain’t what you don’t know that gets you into trouble. It’s what you think you know that just ain’t so.”

152 Responses

Lant. First of all, I clearly did say something different from Chris (perhaps I am interpreting “evidence” differently? I’m not sure), as your response proves. And you clearly do understand at least some of what I’m trying to say, as your response ably proves. So let’s try to have a productive conversation. I’m no expert in macro and would be happy to learn something.

If you want to define rigorous evidence as anything that rules out even a single [causal] model of the world, then I certainly can’t stop you (but that would be you pretending that definitions solve substantive issues). In that case, as you say, every piece of data (much less a regression result) is rigorous evidence, since it rules out an infinite number of models. Macro is full of rigorous evidence, as is phrenology. I am happy to admit that macro has facts that inform us about the world in useful ways, and that a subset of policymakers care about those facts. In fact that was the point of my post, trying to distinguish the two and at least give partial credit to macro.

So let’s not play with definitions; we can say more or less rigorous evidence, or we can define rigorous as “above the median level of rigor for economic analyses”, or we can forget the part about rigor and just ask whether macro analyses provide the same level of input into our overall subjective beliefs about how the world works as do micro analyses. In both cases our final beliefs depend on data, regressions, theory, conventional wisdom, peer effects, and many other things. My claim is that for a well-calibrated decision-maker (yes this is an ultimately subjective statement, but I doubt we differ much in our assessments, and I am happy to defer to you when we do), the regressions portion of that set of inputs (not the facts portion! only the part trying to provide evidence for the causal effects of policies – the empirical part that requires assumptions and a model) is given lower weight in macro than it is in micro. If you have macro examples where you would place especially high weight on the regression results compared to all the other inputs into your true subjective belief about the world, I am honestly interested in hearing about them.

To answer your final question, a VAR is evidence not data (as I have defined them); I don’t care whether one calls it rigorous or not; it tells us something about the world and should be used by policymakers; however it is generally not very convincing (in part because I feel quite confident that even in your mind it *doesn’t* in fact completely rule out all causal structures that are superficially incompatible with it) and therefore shouldn’t be given too much weight by the policymakers; and finally analogous analyses are more often convincing in micro. I hope this is now clear and well-defined enough for you to argue with :)

Julian, you are just repeating what Chris said and pretending substantive ideas are resolved by definitions: “macro has very few rigorous analyses of any kind” just depends on what you mean by “rigorous.” If by that you mean “completely resolves the causal structure to a unique model and parameter values” then yes, but that is not a widely accepted definition of “rigorous.” Simple OLS regressions produce rigorous evidence. If I regress weight on height (for a given population) that produces the conditional mean of weight for a given height. This relationship, if estimated well, is a piece of evidence about the world that any causal theory has to encompass. Now, is there more than one causal model consistent with that piece of evidence? Yes, of course, so one piece of rigorous evidence does not dictate a unique interpretation of the evidence. Take a legal case. Suppose it is established as a fact that the accused’s fingerprint is on the murder weapon. This is “rigorous evidence” about who is the guilty party. The defense may be able to construct a persuasive narrative that is consistent with both the rigorous evidence of the presence of the fingerprint and the defendant’s innocence, but this doesn’t mean the fingerprint isn’t “rigorous evidence,” as it does rule out lots and lots of possible states of the world (all those states of the world in which the defendant’s fingerprint is not on the murder weapon). So, suppose I do a VAR. That VAR does not map into a unique causal structure of the macroeconomy but it does rule out all causal structures incapable of generating those VAR results. Is a VAR “data” and not “evidence”? Is a VAR not “rigorous analysis”? I really don’t understand.

RT @bill_easterly: Will new World Bank Chief Economist Paul Romer fight against RCTs? https://t.co/69IiOfOd0a

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/YTz4bsvccV

How can you say stuff like “Macroeconomics has a tendency to be an evidence-free zone, though, so we also need to be careful.”? Every single major macroeconomic policy debate since the 1980s has been fought out with tons and tons of evidence. Central banks collect and analyze data in a real-time, policy-relevant way all the time. Either this statement is just a circular definition of “evidence” to mean “randomization” or else I am not clear on what evidence you would make the claim that macroeconomics is evidence free.

Lant – although Chris was definitely in blog hyperbole mode, I do think there’s a big difference between data and evidence, and that the distinction is particularly acute for macro. One doesn’t have to be limited to randomization to think that macro has very few rigorous analyses of any kind (not their fault of course). This was obvious to me as an econ newbie when I first went to grad school, way before RCTs were in vogue. And it was equally obvious to me during my 3.5 years working at a central bank.

(1/4) @cblatts, as always, thought-provoking: RCTs=”regimented process of piecemeal tinkering” (ie experimentation) https://t.co/oXaG2rgHpI

RT @cblatts: The World Bank has a surprising (and good) new Chief Economist. But will he end the randomista movement? https://t.co/q42Nh8V3…

RT @henryoverman: randomized trials: “a regimented process of piecemeal tinkering” https://t.co/UjXPTkBkEZ @cblatts on World Bank, Romer &…

RT @drunkeynesian: Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/YgJGVZ6V0e

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/j7krcQCgk3

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/rFYPJ8H3ZT

Paul Romer and “the gush of randomized control trials” at the world bank. https://t.co/rdWnQDdzvg #RCT #Econometrics

RT @nyhetsbubbla: Paul Romer new chief economist at the World Bank, known as an advocate of private cities and economic free zones https://t.co/C…

Paul Romer new chief economist at the World Bank, known as an advocate of private cities and economic free zones https://t.co/ClV0rxC0Y5

RT @dgomezco: Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? – Chris Blattman https://t.co/Hj50…

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities?

A review by @cblatts.

https://t.co/hoszlfXxVu

Time for #wb to do innovative + rigorous macro thinking beyond stabilization policies + improving investment climate https://t.co/ki5OtpC4Kp

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? – Chris Blattman https://t.co/YCvwGDNp5P

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/hJ9bLMDhB7

https://t.co/7QhiFfm2o0

.@cblatts or can he hopefully end the unproductive strictly either/or RCT drama with more sane stance of diff methods for diff Q’s?

And in case he doesn’t get far in reforming randomista, at least he can have more researchers in the home country especially developing countries rather than D.C. when they are informing respective country policies! It is high time that researchers stop living in D.C. to inform developing countries development policy!

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/9e151qsGyI

@cblatts Nice post(as usual), but my answer to the question is: of course not!

How will Romer’s charter cities kick effect @WorldBank development research? @cblatts on WB’s new chief economist https://t.co/sTzzs1Q7ke

Ironically your Twain quote is something you think you know but don’t actually :) The sentiment is due to Josh Billings instead:

http://quoteinvestigator.com/2015/05/30/better-know/

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/ejmAfD0zRG

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/8xUhypYUPy

RT @Noahpinion: Does development policy need more randomized trials, or fewer? https://t.co/3FTtcKkwrJ

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/U3fwxcgr3x

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/YgJGVZ6V0e

Mmm… https://t.co/S5QV7c9rbQ

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/rtvgDX5Wy7

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/w2k00MjBrX

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/S53emeZUFq

@cblatts appropriately enough, twain quote is misattributed (I used to attribute to him myself) https://t.co/PJjNwbc9VE

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/pmpDI54Fv0

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? – Chris Blattman https://t.co/Hj50LpAHkx

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? -… https://t.co/cpv4o3pHPp

@cblatts

Re discovering knowledge that is useful for billions, “it doesn’t matter what color the cat is, as long as it catches the mouse.”

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/ZRRbr7Dj7f @cblatts

@bill_easterly @cblatts Or for Charter Cities? https://t.co/0sFWpwwmIf

Will new World Bank Chief Economist Paul Romer fight against RCTs? https://t.co/69IiOfOd0a

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/ygHv74My4C Nice read

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? – Chris Blattman https://t.co/zFztzWExr3

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/Y2P35K7MfU

Hey @YohannaLoucheur @IsabelleRoy12: Will Paul Romer get WB out of the randomista business and into Charter Cities? https://t.co/RM8KXAQn2n

Will Paul Romer get the World Bank out of the randomista business and into Charter Cities? https://t.co/x6Y9yepel7

@cblatts I don’t think Kaushik was a big RCT fan, either. Good points, but not too worried for my randomista Bank friends.
