Why We Fight: The Roots of War and the Paths to Peace

An acclaimed expert on violence and seasoned peacebuilder explains the five reasons why conflict (rarely) blooms into war, and how to interrupt that deadly process

Presentation to the Joint Chiefs Operations Directorate

When is War Justified?

Conversation with Teny Gross on Gang Violence

Why are some people and societies poor, violent, and oppressive? What leads people into poverty, violence, and crime? What events and interventions lead them out?

Chris Blattman is an economist and political scientist who uses field work and statistics to study poverty, political engagement, the causes and consequences of violence, and policy in developing countries. He is a professor in the Harris School of Public Policy at the University of Chicago.


Popular press coverage

The Spectator picks Why We Fight as a best book of 2022

Journalist and foreign correspondent Michela Wrong chooses Why We Fight

Vox FuturePerfect50

Profile: Economist Chris Blattman has reshaped our understanding of violence and poverty

Predicting and preventing violent crime before it happens

NPR’s Hidden Brain podcast on violence from Monrovia to Chicago, and an amazing new way to stop it

Behavioral Scientist: Summer Book List 2022

Behavioral Scientist recommends Why We Fight

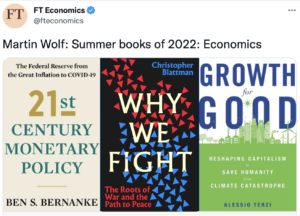

Financial Times: A best summer book for 2022

Martin Wolf selects his best mid-year reads in economics

My popular writing

Cyber Warfare Is Getting Real: The risk of escalation from cyberattacks has never been greater—or the pursuit of peace more complicated

My piece in Wired

The Hard Truth About Long Wars: Why the Conflict in Ukraine Won’t End Anytime Soon

My Foreign Affairs piece on how ideals and ideology explain so many long wars, including this one

The roots of war: To discern why we fight, we should ask why we do not

Book excerpt in the Boston Review

If we elected more women, would there be less war? Yes, but not for the reasons you think

Opinion piece in Newsweek